It used to be that computing power trickled down from hulking supercomputers to the chips in our pockets.

Over the past 15 years, innovation has changed course: GPUs, born from gaming and scaled through accelerated computing, have surged upstream to remake supercomputing and carry the AI revolution to scientific computing’s most rarefied systems.

JUPITER at Forschungszentrum Jülich is the emblem of this new era.

Not only is it among the most efficient supercomputers, producing 63.3 gigaflops per watt, but it’s also a powerhouse for AI, delivering 116 AI exaflops, up from 92 at ISC High Performance 2025.

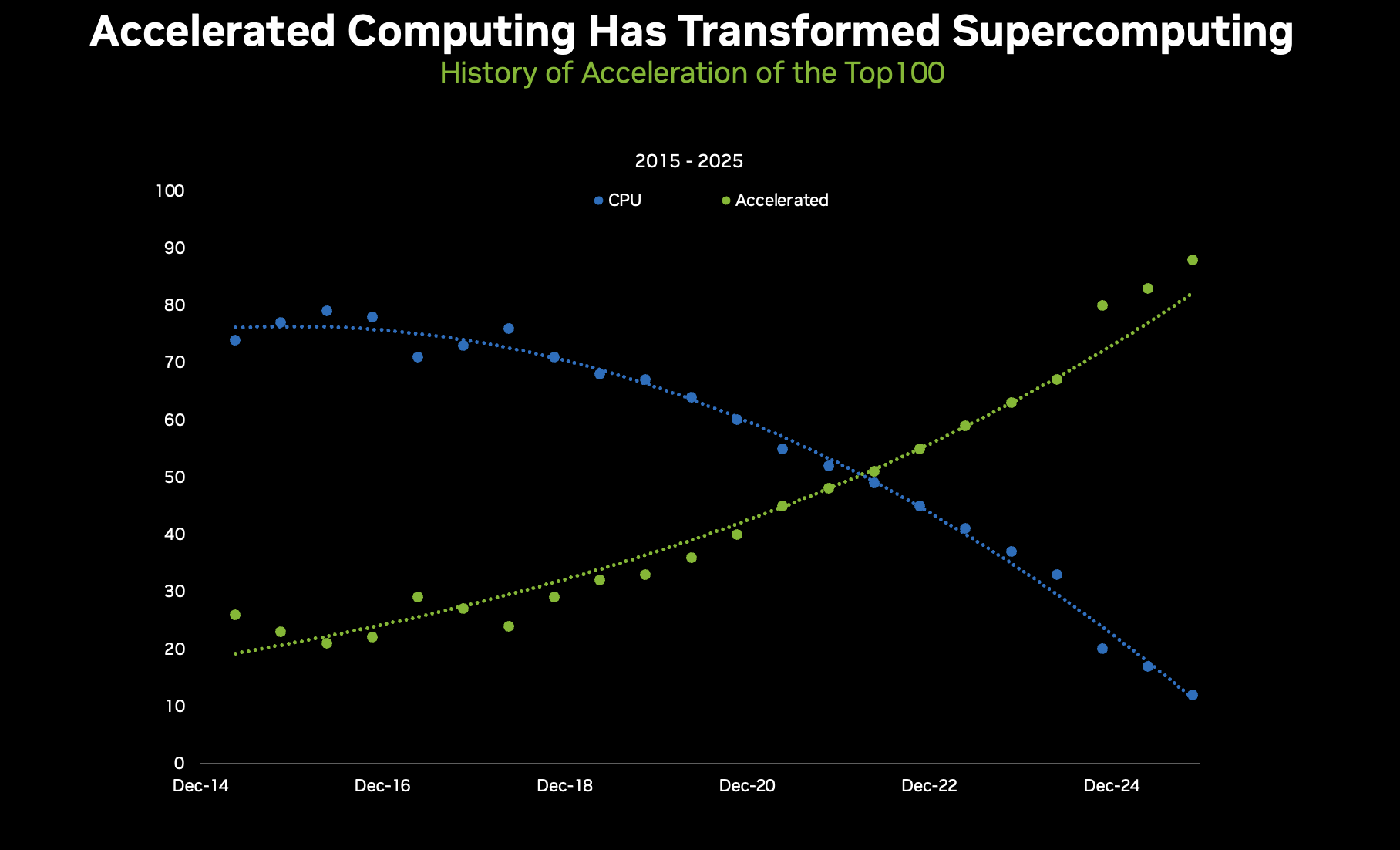

This is the “flip” in action. In 2019, nearly 70% of the TOP100 high-performance computing systems were CPU-only. Today, that number has plunged below 15%, with 88 of the TOP100 systems accelerated, and 80% of those powered by NVIDIA GPUs.

Across the broader TOP500, 388 systems (78%) now use NVIDIA technology, including 218 GPU-accelerated systems (up 34 systems year over year) and 362 systems connected by high-performance NVIDIA networking. The trend is unmistakable: accelerated computing has become the standard.

But the real revolution is in AI performance. With architectures like NVIDIA Hopper and Blackwell and systems like JUPITER, researchers now have access to orders of magnitude more AI compute than ever.

AI FLOPS have become the new yardstick, enabling breakthroughs in climate modeling, drug discovery and quantum simulation, problems that demand both scale and efficiency.

At SC16, years before today’s generative AI wave, NVIDIA founder and CEO Jensen Huang saw what was coming. He predicted that AI would soon reshape the world’s most powerful computing systems.

“Several years ago, deep learning came along, like Thor’s hammer falling from the sky, and gave us an incredibly powerful tool to solve some of the most difficult problems in the world,” Huang declared.

The math behind computing power consumption had already made the shift to GPUs inevitable.

But it was the AI revolution, ignited by the NVIDIA CUDA-X computing platform built on these GPUs, that extended the capabilities of these machines dramatically.

Suddenly, supercomputers could deliver meaningful science at double precision (FP64) as well as at mixed precision (FP32, FP16) and even at ultra-efficient formats like INT8 and beyond, the backbone of modern AI.
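To make the precision formats concrete, here is a minimal sketch of how many values of each format fit in one gibibyte of memory. It covers storage width only; actual throughput and energy savings depend on the hardware and are not modeled here. The names `FORMATS`, `bytes_per_value` and `values_per_gib` are illustrative and do not come from any NVIDIA library.

```python
import struct

# Map each numeric format to a struct format code.
# "<" selects standard sizes, so results do not depend on the platform.
FORMATS = {"FP64": "d", "FP32": "f", "FP16": "e", "INT8": "b"}


def bytes_per_value(name: str) -> int:
    """Return the storage size in bytes of one value in the given format."""
    return struct.calcsize("<" + FORMATS[name])


def values_per_gib(name: str) -> int:
    """Return how many values of the given format fit in 1 GiB."""
    return 2**30 // bytes_per_value(name)


for name in FORMATS:
    print(f"{name}: {bytes_per_value(name)} byte(s), "
          f"{values_per_gib(name):,} values per GiB")
```

Under these assumptions, an INT8 value takes one-eighth the space of an FP64 value, so the same memory holds eight times as many of them.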

This flexibility allowed researchers to stretch power budgets further than ever to run larger, more complex simulations and train deeper neural networks, all while maximizing performance per watt.

But even before AI took hold, the raw numbers had already forced the issue. Power budgets don’t negotiate. Supercomputer researchers, inside NVIDIA and across the community, were coming to grips with the road ahead, and it was paved with GPUs.

To reach exascale without a Hoover Dam-sized electric bill, researchers needed acceleration. GPUs delivered far more operations per watt than CPUs. That was the pre-AI tell of what was to come, and that’s why when the AI boom hit, large-scale GPU systems already had momentum.
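The power argument comes down to one division: sustained operations per second divided by operations per second per watt. The sketch below applies it to the 63.3 gigaflops-per-watt figure quoted earlier for JUPITER. It is back-of-the-envelope arithmetic, not a statement of any system’s measured power draw. The function name `power_megawatts` and the ten-times-lower efficiency are hypothetical, chosen only to show how the bill scales.

```python
EXAFLOP = 1e18                   # one exaflop: 10^18 operations per second
JUPITER_GFLOPS_PER_WATT = 63.3   # efficiency figure quoted in the article


def power_megawatts(target_flops: float, gflops_per_watt: float) -> float:
    """Return the power in megawatts needed to sustain target_flops."""
    watts = target_flops / (gflops_per_watt * 1e9)
    return watts / 1e6


# One exaflop at the quoted efficiency.
exa_at_quoted = power_megawatts(EXAFLOP, JUPITER_GFLOPS_PER_WATT)

# Hypothetical comparison: a machine ten times less efficient.
exa_at_tenth = power_megawatts(EXAFLOP, JUPITER_GFLOPS_PER_WATT / 10)

print(f"At 63.3 GF/W: {exa_at_quoted:.1f} MW")
print(f"At 6.33 GF/W (hypothetical): {exa_at_tenth:.1f} MW")
```

At the quoted efficiency, one exaflop works out to roughly 15.8 megawatts, and every tenfold drop in efficiency multiplies that figure by ten.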

The seeds were planted with Titan in 2012 at the Oak Ridge National Laboratory, one of the first major U.S. systems to pair CPUs with GPUs at unprecedented scale, showing how hierarchical parallelism could unlock huge application gains.

In Europe in 2013, Piz Daint set a new bar for both performance and efficiency, then proved the point where it matters: real applications like COSMO forecasting for weather prediction.

By 2017, the inflection was undeniable. Summit at Oak Ridge National Laboratory and Sierra at Lawrence Livermore National Laboratory ushered in a new standard for leadership-class systems: acceleration first. They didn’t just run faster; they changed the questions science could ask for climate modeling, genomics, materials and more.

These systems are able to do much more with much less. On the Green500 list of the most efficient systems, the top eight are NVIDIA-accelerated, with NVIDIA Quantum InfiniBand connecting 7 of the Top 10.

But the story behind these headline numbers is how AI capabilities have become the yardstick: JUPITER delivers 116 AI exaflops alongside 1 EF FP64, a clear signal of how science now blends simulation and AI.

Power efficiency didn’t just make exascale attainable; it made AI at exascale practical. And once science had AI at scale, the curve bent sharply upward.

What It Means Next

This isn’t just about benchmarks. It’s about real science:

- Faster, more accurate weather and climate models

- Breakthroughs in drug discovery and genomics

- Simulations of fusion reactors and quantum systems

- New frontiers in AI-driven research across every discipline

The shift started as a power-efficiency imperative, became an architectural advantage and has matured into a scientific superpower: simulation and AI, together, at unprecedented scale.

It starts with scientific computing. Now, the rest of computing will follow.